-

Notifications

You must be signed in to change notification settings - Fork 271

add implementation of a generic memory two + 2 strategies #1171

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

add implementation of a generic memory two + 2 strategies #1171

Conversation

Close #1063 A generic class for memory two players, similar to the MemoryOnePlayer class. If transition rates are not given the the player is set to default which is Cooperator. I have added the equivilent tests and a reference from issue #1063. Moreover, this implements two strategies of interest from #1063: - AON2 and Delayed AON1 Each strategy has 16 states. I have added tests for most of the states.

|

Note that in the paper Memory-n strategies of direct reciprocity there are:

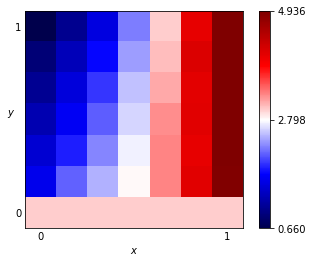

The fingerprints appear to be similar. |

|

Finally the tests are going to fail because of: which is because of the type hints. I am not really sure why it's breaking 😅 I would appreciate some help. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Some minor requests from me @Nikoleta-v3, will take a look at the type hints now.

| @@ -57,7 +57,7 @@ | |||

| MemoryOnePlayer, ALLCorALLD, FirmButFair, GTFT, SoftJoss, | |||

| StochasticCooperator, StochasticWSLS, WinStayLoseShift, WinShiftLoseStay, | |||

| ReactivePlayer) | |||

| from .memorytwo import MEM2 | |||

| from .memorytwo import MemoryTwoPlayer, AON2, DelayedAON1, MEM2 | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

AON2 and DelayedAON1 need to be added to the all_players list.

axelrod/strategies/memorytwo.py

Outdated

| 'manipulates_state': False | ||

| } | ||

|

|

||

| def __init__(self, initial: Action = C) -> None: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As these strategies are defined in the literature, do we need initial to be an option?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I agree, but there are many strategies in the library (from the literature) already which have initial as an option.

Axelrod/axelrod/strategies/memoryone.py

Lines 98 to 123 in 5e09643

| class WinStayLoseShift(MemoryOnePlayer): | |

| """ | |

| Win-Stay Lose-Shift, also called Pavlov. | |

| Names: | |

| - Win Stay Lose Shift: [Nowak1993]_ | |

| - WSLS: [Stewart2012]_ | |

| - Pavlov: [Kraines1989]_ | |

| """ | |

| name = 'Win-Stay Lose-Shift' | |

| classifier = { | |

| 'memory_depth': 1, # Memory-one Four-Vector | |

| 'stochastic': False, | |

| 'makes_use_of': set(), | |

| 'long_run_time': False, | |

| 'inspects_source': False, | |

| 'manipulates_source': False, | |

| 'manipulates_state': False | |

| } | |

| def __init__(self, initial: Action = C) -> None: | |

| four_vector = (1, 0, 0, 1) | |

| super().__init__(four_vector) | |

| self._initial = initial |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure but in this case, they're very specific strategies and we go down the line of needing two initial moves to be truly generic and then probably overwriting the repr method to not display them as they're defined in the literature?

I suggest just removing the option for these two strategies. (If a user really wants to they can always inherit and overwrite.)

axelrod/strategies/memorytwo.py

Outdated

| In essence is a strategy that starts of by cooperating and will cooperate | ||

| again only after the states (CC, CC), (CD, CD), (CD, DD), (DD, CD), | ||

| (DC, DC) and (DD, DD). | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we specifically write the vector here and also write the other vectors reported as equivalent (but different) in [Hilbe2017] (mentioning the reported self cooperation rate?).

Same for AON2.

Add information about the vectors which are reported as equivilent. I am not sure I can say that they are actually different in the documentation without saying why and without adding the fingerprints.

@Nikoleta-v3 I've opened https://github.com/Nikoleta-v3/Axelrod/pull/8 that fixes the type hint issue (for me). I've essentially been less specific in some places with the hints and been explicit in the |

axelrod/strategies/memorytwo.py

Outdated

|

|

||

| states = [(hist[:2], hist[2:]) for hist in list(itertools.product((C, D), repeat=4))] | ||

|

|

||

| self._sixteen_vector = dict(zip(states, map(float, sixteen_vector))) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think the automatically derived type here is not correct. Maybe if you wrap map(...) with tuple(...) it will work since it won't be a list of tuples until actually used in a list/tuple context, which the type checker may not be able to determine.

Alternatively you could explicitly set the type (perhaps better to do in the __init__ function probably).

axelrod/strategies/memorytwo.py

Outdated

| rounds in which the focal player cooperated while at least one coplayer defected, | ||

| the strategy responds by defecting the following k rounds. | ||

|

|

||

| In [Hilbe2017]_ the following vectors are reported as equivalent to AON2 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

(Very minor request:)

reported as "equivalent" ... cooperation rate (note that these a not the same)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

(Same for the other one).

| self.assertFalse(self.p1.classifier['stochastic']) | ||

| self.assertFalse(self.p2.classifier['stochastic']) | ||

|

|

||

| class TestMemoryTwoPlayer(unittest.TestCase): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@Nikoleta-v3 could we add some tests of the player with stochastic sixteen_vector (so using TestPlayer.versus_test with init_kwargs). Seeded tests, including same history/different seed to show that we indeed have stochastic behaviour?

| self.assertEqual(self.p2.name, | ||

| "Generic Memory Two Player: (1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0)") | ||

|

|

||

| def test_stochastic_classification(self): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we rename this to test_deterministic_classification and then also include a test_stochastic_classification where we use sixteen_vector where the values aren't all in [0, 1].

| states = [(hist[:2], hist[2:]) | ||

| for hist in list(itertools.product((C, D), repeat=4))] | ||

|

|

||

| self._sixteen_vector = dict(zip(states, sixteen_vector)) # type: Dict[tuple, float] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@marcharper this no longer maps float on to states, it's a change I suggested to @Nikoleta-v3 when trying to get the type hints to work https://github.com/Nikoleta-v3/Axelrod/pull/8

I couldn't see why we needed to map(float but we do do it for memory_one so just highlighting in case this is something I've forgotten/missed (if indeed it's not needed, let's get rid of it from memory_one too).

Added tests against two opponents. For different seed the behaviour of the memory two strategy changes.

axelrod/strategies/memorytwo.py

Outdated

| 4. [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1], self cooperation | ||

| rate: 0.952 | ||

|

|

||

| AON2 is implemented using vector 1 due it's self cooperation rate. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: it's --> its

axelrod/strategies/memorytwo.py

Outdated

| @@ -148,6 +162,18 @@ class DelayedAON1(MemoryTwoPlayer): | |||

| Delayed AON1 a memory two strategy also introduced in [Hilbe2017]_ and belongs to the | |||

| AONk family. Note that AON1 is equivalent to Win Stay Lose Swift. | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Swift -> Shift

axelrod/strategies/memorytwo.py

Outdated

| class MemoryTwoPlayer(Player): | ||

| """ | ||

| Uses a sixteen-vector for strategies based on the last two rounds of play, | ||

| 1. P(C|CC, CC) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe better as (or something similar to):

Based on the 16 conditional probabilities P(X | I,J,K,L) where X, I, J, K, L in [C, D] and I, J are the players last two moves and K, L are the opponents last two moves.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think I'd prefer them explicitly listed, the ordered isn't clear otherwise?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Unless your suggestion is to use your sentence to clarify what the list is and also list them which is a great call 👍

axelrod/strategies/memorytwo.py

Outdated

| 'manipulates_state': False | ||

| } | ||

|

|

||

| def __init__(self, sixteen_vector: Tuple[float, float, float, float, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could use Tuple[float, ...] for brevity but it wouldn't enforce length 16

axelrod/strategies/memorytwo.py

Outdated

|

|

||

| def set_initial_sixteen_vector(self, sixteen_vector): | ||

| if sixteen_vector is None: | ||

| sixteen_vector = (1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

tuple([1] * 16)

axelrod/strategies/memorytwo.py

Outdated

| to cooperate after rounds with mutual cooperation (provided the last k actions | ||

| of the focal player were actually consistent). | ||

|

|

||

| 2.Error correcting. A strategy is error correcting after at most k rounds if, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

missing space in 2.Error

axelrod/strategies/memorytwo.py

Outdated

|

|

||

| 2.Error correcting. A strategy is error correcting after at most k rounds if, | ||

| after any history, it generally takes a group of players at most k + 1 rounds | ||

| to re establish mutual cooperation. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

re-establish

axelrod/strategies/memorytwo.py

Outdated

| to re establish mutual cooperation. | ||

|

|

||

| 3. Retaliating. A strategy is retaliating for at least k rounds if, after | ||

| rounds in which the focal player cooperated while at least one coplayer defected, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

coplayer = opponent ? (if so, I like it)

I think this is supposed to say that the opponent defected while the player was cooperating, as written it makes it seem like there are multiple coplayers.

axelrod/strategies/memorytwo.py

Outdated

| the strategy responds by defecting the following k rounds. | ||

|

|

||

| In [Hilbe2017]_ the following vectors are reported as "equivalent" to AON2 | ||

| with their respective self cooperation rate (note that these a not the same): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

a -> are

self cooperation -> self-cooperation (and elsewhere)

axelrod/strategies/memorytwo.py

Outdated

|

|

||

| AON2 is implemented using vector 1 due it's self cooperation rate. | ||

|

|

||

| In essence is a strategy that starts of by cooperating and will cooperate again |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it is

off

axelrod/strategies/memorytwo.py

Outdated

| class DelayedAON1(MemoryTwoPlayer): | ||

| """ | ||

| Delayed AON1 a memory two strategy also introduced in [Hilbe2017]_ and belongs to the | ||

| AONk family. Note that AON1 is equivalent to Win Stay Lose Swift. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Swift -> Shift

axelrod/strategies/memorytwo.py

Outdated

|

|

||

| name = 'AON2' | ||

| classifier = { | ||

| 'memory_depth': 2, # Memory-one Four-Vector |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

16 vector. Not sure we need a comment here since it's a memory two strategy by definition, or maybe that's a more useful comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I agree, I don't think the comment is useful here. 👍

axelrod/strategies/memorytwo.py

Outdated

|

|

||

| name = 'Delayed AON1' | ||

| classifier = { | ||

| 'memory_depth': 2, # Memory-one Four-Vector |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

same

| self.assertEqual(len(warning), 1) | ||

| self.assertEqual(warning[-1].category, UserWarning) | ||

| self.assertEqual(str(warning[-1].message), | ||

| "Memory two player is set to default, Cooperator.") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

remove extra space

| 'manipulates_state': False | ||

| } | ||

|

|

||

| def test_class_classification(self): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Does this test have to be explicit? Or is it covered by the generic player tests?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks very nice overall, lots of minor comments, approving since there are no substantial issues

|

Nice work so far :) Thanks for adding these strategies! |

|

Thank you for the feedback @marcharper and thank you both for the review. I believe I addressed everything. |

|

Thanks @Nikoleta-v3, you've addressed all my requests. Will leave it to @marcharper for final ok 👍 |

Closes #1063

A generic class for memory two players, similar to the

MemoryOnePlayerclass.If transition rates are not given the the player is set to default which is Cooperator.

I have added the equivalent tests and a reference from issue #1063.

Moreover, this implements two strategies of interest from #1063:

AON2andDelayed AON1Each strategy has 16 states. I have added tests for most of the states.