Test timings on Travis

Unit tests are implemented in R packages as files under the tests/ subdirectory, using built-in functions such as stopifnot or helper packages such as testthat. These unit tests can be used to ensure that package functions, given known inputs, yield known outputs. Thus R package developers can use unit tests to avoid introducing bugs in their code.
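For readers new to R unit tests, here is a minimal sketch of both styles. The function `add_one` is a hypothetical stand-in for a package function, defined inline so the example is self-contained; the testthat part only runs if testthat is installed.

```r
# Hypothetical package function (not from any real package).
add_one <- function(x) x + 1

# Base R style: stopifnot() raises an error unless every condition is TRUE.
stopifnot(add_one(1) == 2)
stopifnot(identical(add_one(c(1, 2)), c(2, 3)))

# testthat style: a named test containing one or more expectations.
if (requireNamespace("testthat", quietly = TRUE)) {
  testthat::test_that("add_one increments its input", {
    testthat::expect_equal(add_one(1), 2)
  })
}
```

Both styles check correctness only; neither records how long the code took to run.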

However, there is currently no easy way to see if package developers are altering the speed of their code. Likewise, there is no easy way to see if the disk/memory usage of functions defined in a package is changing over time.

The idea of this project is to provide a package with functions that make it easy for R package developers to track quantitative performance metrics of their code over time.

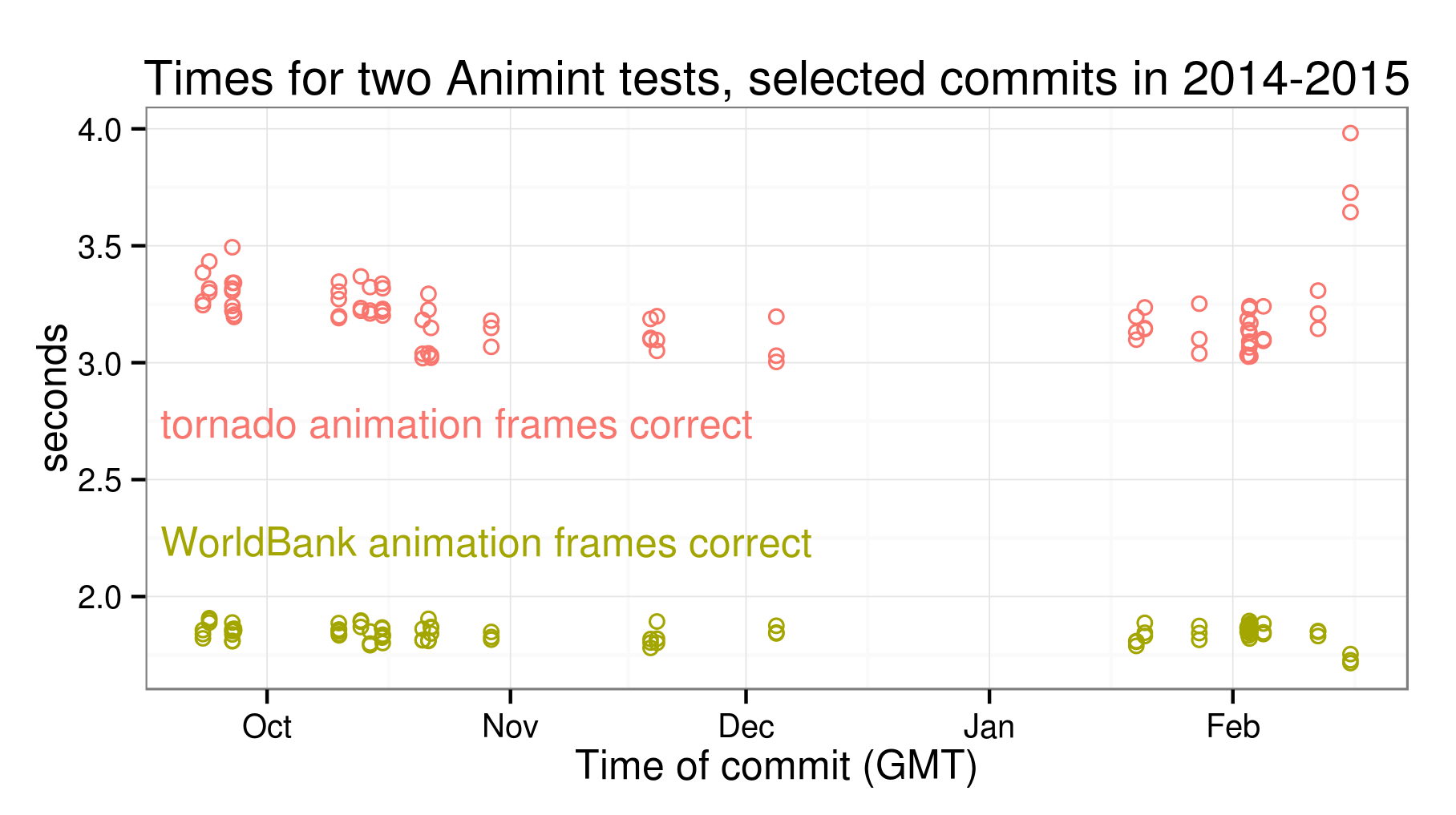

As a proof of concept, I implemented the testthatQuantity package, which I used to time two tests from the Animint package.

The plot above shows that in the most recent commit, the “tornado animation frames correct” test has gotten a little slower. Plots such as this could be used to alert Animint package developers that they may have introduced unwanted changes that resulted in slower code.

The approach of testthatQuantity is to run all test code on the user/developer’s machine. Given an R package in a git repository, it uses git checkout to get lots of different versions of the package, and it runs the tests for each package version.
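The checkout-and-time loop described above could look roughly like the sketch below. This is not the testthatQuantity implementation: the names `time_commits`, `repo`, `shas`, and `run_tests` are hypothetical, and the sketch assumes that `git` is on the PATH and that the working tree is clean so each checkout succeeds.

```r
# Time a user-supplied test function once for each commit in `shas`.
# All names here are illustrative assumptions, not testthatQuantity API.
time_commits <- function(repo, shas, run_tests) {
  # Run git inside `repo` and stop with its output if the command fails.
  git <- function(...) {
    out <- suppressWarnings(
      system2("git", c("-C", shQuote(repo), ...), stdout = TRUE, stderr = TRUE)
    )
    status <- attr(out, "status")
    if (!is.null(status) && status != 0) {
      stop("git failed: ", paste(out, collapse = "\n"))
    }
    out
  }
  # Remember the starting branch (or commit, if HEAD is detached)
  # so that the repository is restored when the function exits.
  start <- git("rev-parse", "--abbrev-ref", "HEAD")
  if (identical(start, "HEAD")) start <- git("rev-parse", "HEAD")
  on.exit(git("checkout", "--quiet", start), add = TRUE)
  seconds <- vapply(shas, function(sha) {
    git("checkout", "--quiet", sha)
    # Elapsed (wall-clock) seconds for one run of the tests at this commit.
    system.time(run_tests(repo))[["elapsed"]]
  }, numeric(1))
  data.frame(sha = shas, seconds = unname(seconds), stringsAsFactors = FALSE)
}
```

A call might look like `time_commits("path/to/pkg", shas, function(path) testthat::test_dir(file.path(path, "tests", "testthat")))`, where the path and the choice of `test_dir` are again assumptions. A single run per commit is noisy, so in practice each test would probably be timed several times.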

- Current code runs only the tests in 1 test file. Ideally we would like to quantify timings for all tests/testthat/test-*.R files.

- Current code only does test timings. Ideally we would like to quantify memory/disk usage (possibly using a code profiler), and record whether each test passes or fails.

- Maybe have some integration with manual git bisect? For example, we can begin by timing the test for the oldest and newest commits, plot those timings, and then label them as either good=fast or bad=slow. Then we can run git bisect and continue to run timings and make labels until we find the first commit that slowed down.
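The bisection idea in the last item can be illustrated without git at all. The sketch below is only the search logic, not a wrapper around `git bisect`: `first_slow_commit` and `is_slow` are hypothetical names, `commits` is assumed to be ordered oldest to newest, the oldest commit is assumed fast and the newest slow, and the slowdown is assumed to persist once introduced.

```r
# Find the first slow commit by repeatedly halving the search interval.
# `is_slow` is a hypothetical predicate, e.g. one that times a test at the
# given commit and compares the result to a threshold or a manual label.
first_slow_commit <- function(commits, is_slow) {
  stopifnot(length(commits) >= 2)
  good <- 1L               # index of a commit known to be fast (oldest)
  bad <- length(commits)   # index of a commit known to be slow (newest)
  while (bad - good > 1L) {
    mid <- (good + bad) %/% 2L
    if (is_slow(commits[[mid]])) bad <- mid else good <- mid
  }
  commits[[bad]]
}

# Toy usage: pretend that commit "d" and everything after it is slow.
commits <- c("a", "b", "c", "d", "e", "f")
first_slow_commit(commits, function(sha) sha %in% c("d", "e", "f"))
```

With n commits this needs about log2(n) timing runs instead of n, which matters when each run means installing and testing a full package version.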

Integration with r-travis. We could test the code on Travis after every push to GitHub, and then have a web page with the plot be updated automatically when the Travis build finishes. But it may be a bit more complicated to get meaningful timings, since I guess each Travis build machine has a different hardware configuration (CPU speed). Some ideas about how to push files from Travis to GitHub:

- http://sleepycoders.blogspot.ca/2013/03/sharing-travis-ci-generated-files.html

- http://rmflight.github.io/posts/2014/11/travis_ci_gh_pages.html

Build on top of the new testthat reporter framework.
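As a starting point, testthat already collects per-test results when a file is run. The sketch below assumes a reasonably recent testthat in which `test_file()` returns a results object that `as.data.frame()` can convert, with columns such as `failed`, `error`, and `real` (elapsed seconds); column names may differ between versions. The temporary test file and its two toy tests are made up for illustration.

```r
if (requireNamespace("testthat", quietly = TRUE)) {
  library(testthat)
  # Write a throwaway test file with one passing and one failing test.
  tfile <- tempfile(pattern = "test-", fileext = ".R")
  writeLines(c(
    'test_that("addition works", { expect_equal(1 + 1, 2) })',
    'test_that("deliberate failure", { expect_equal(1 + 1, 3) })'
  ), tfile)
  # The "silent" reporter suppresses console output; results are returned.
  results <- test_file(tfile, reporter = "silent")
  # One row per test, including failure counts and timings.
  summary.df <- as.data.frame(results)
  print(summary.df[, c("test", "failed", "error", "real")])
}
```

A custom reporter could record the same quantities as the tests run, which is presumably what building on the reporter framework would involve.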

Any interested students should get in touch with co-mentors Toby Dylan Hocking <[email protected]> and Hadley Wickham <[email protected]> as soon as possible, so we can begin work on a more precise project proposal with specific goals and target completion dates.

Students, please post links to your test results below. Doing more tests and more difficult tests makes you more likely to be selected for this project.

- Easy: download testthatQuantity and run the example code on one test file of a package of your choice.

- Medium: edit the `test.file` function so that it records the pass/fail status of each test. Hint: look at `testthat::get_reporter()`.

- Hard: the current code in `test.file` and `test.commit` assumes that the tests specified in `tfile` do not change across different git commits, which may or may not be true. Implement a `test.commits(tfile, SHA1.vec)` function which saves the tests in `tfile` and runs them against all commits specified in the `SHA1.vec` character vector.

The repository TravisTests has been provided by Akash Tandon as solutions for the Easy, Medium and Hard test questions.