CatBot: Talk to your cats. Access it here!

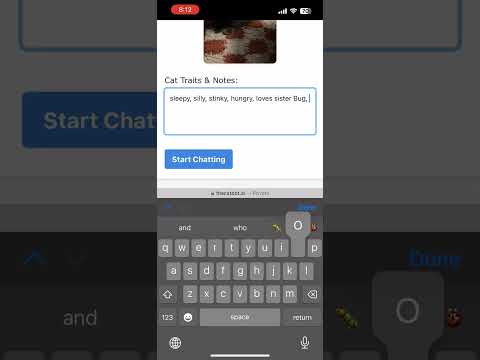

This project is a chatbot that allows you to talk to your cats. 😄

It is a Node.js app that uses the llama3.2 model to service prompt requests. The app and AI images are pulled from Docker Hub.
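As a rough illustration of how a Node.js app might send a prompt to the model, here is a minimal sketch that calls Ollama's `/api/generate` endpoint. It assumes the server is reachable on Ollama's default port 11434 and that the model tag is `llama3.2`. The `OLLAMA_URL` variable and the names `buildGenerateRequest` and `askCat` are hypothetical and not taken from this project.

```javascript
// Sketch: send one prompt to an Ollama server and return the reply text.
// Assumptions: Ollama listens on its default port 11434 and the model tag is
// "llama3.2". OLLAMA_URL, buildGenerateRequest and askCat are hypothetical names.
const OLLAMA_URL = process.env.OLLAMA_URL || "http://localhost:11434";

// Build the fetch() arguments for Ollama's /api/generate endpoint.
function buildGenerateRequest(prompt, model = "llama3.2") {
  return {
    url: `${OLLAMA_URL}/api/generate`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      // stream: false asks for a single JSON object instead of a chunk stream.
      body: JSON.stringify({ model, prompt, stream: false }),
    },
  };
}

// Send the prompt and return the generated text (the "response" field).
async function askCat(prompt) {
  const { url, options } = buildGenerateRequest(prompt);
  const res = await fetch(url, options); // global fetch needs Node.js 18+
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = await res.json();
  return data.response;
}
```

Keeping the request construction separate from the network call makes the payload easy to check without a running model.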

- Run an LLM container

docker run -p 11434:11434 --name model samanthamorris684/ollama@sha256:78a199fa9652a16429037726943a82bd4916975fecf2b105d06e140ae70a1420

- Start the app:

dotenv -e .env.dev -- npm run start:dev

- Run the tests:

npm test

I used Docker Compose to develop this locally.

- Install model on Docker Model Runner:

docker model pull ai/llama3.2

- Note: This uses the DMR flag in the env.compose file to interact with the OpenAI API call and the llama.cpp server.

docker compose up --build

- When done,

docker compose down
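To illustrate the idea of a DMR flag switching backends, here is a minimal sketch of how an app might pick between Docker Model Runner's OpenAI-compatible endpoint and an Ollama server. It assumes the flag reaches the app as an environment variable named `DMR` with the string value `"true"`. Both URLs are defaults that may differ in your setup, and `resolveBackend` and `buildBody` are hypothetical names rather than this project's code.

```javascript
// Sketch: choose the model backend from a DMR flag.
// Assumptions: the flag arrives as the environment variable DMR with the
// string value "true"; both URLs are defaults that may differ in your setup;
// resolveBackend and buildBody are hypothetical names.
function resolveBackend(env = process.env) {
  if (env.DMR === "true") {
    return {
      kind: "openai",
      // Docker Model Runner's OpenAI-compatible endpoint as seen from a container.
      url: "http://model-runner.docker.internal/engines/v1/chat/completions",
      model: "ai/llama3.2",
    };
  }
  return {
    kind: "ollama",
    url: "http://localhost:11434/api/generate",
    model: "llama3.2",
  };
}

// Build a request body in the shape each backend expects.
function buildBody(backend, prompt) {
  return backend.kind === "openai"
    ? { model: backend.model, messages: [{ role: "user", content: prompt }] }
    : { model: backend.model, prompt, stream: false };
}
```

With this split, the rest of the app only needs the returned `url` and body, regardless of which backend the flag selects.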

- Build:

docker build -f ollama/Dockerfile -t ollama_model --platform=linux/amd64 .

- Run:

docker run -d --platform=linux/amd64 ollama_model

- Set up an EKS cluster. I followed this tutorial.

kubectl apply -k out/overlays/desktop

- I had to rebuild the image for AMD64 (linux/amd64) when the pod was unable to pull it. The pod should then know to pull the AMD64 image build.

- The default instance type for your nodes is m5.large. Increase your resource requests as needed.

- Create a node group for the model containers with the taint and labels set correctly (to model=true).

- Set up Route 53: point the alias at the K8s cluster's public domain, request a certificate, and put the certificate's CNAME name and value into Route 53. Additionally, add your pre-generated nameservers to the DNS tab of your domain service.

- Switch kube contexts when working with Minikube vs. EKS. Get contexts by running

kubectl config get-contexts

and switch by running:

kubectl config use-context {NAME}
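
For the node group tainted and labeled with model=true, the model pods need a matching node selector and toleration, otherwise they will not be scheduled there. The sketch below generates those scheduling fields as JSON. It assumes the taint key and value are `model=true` and that the taint effect is `NoSchedule`, which the notes above do not state. `modelScheduling` is a hypothetical helper.

```javascript
// Sketch: scheduling fields that pin a pod to nodes labeled model=true and
// let it tolerate a matching taint.
// Assumptions: the taint key/value is model=true with effect NoSchedule;
// modelScheduling is a hypothetical helper.
function modelScheduling(effect = "NoSchedule") {
  return {
    // Only nodes carrying the label model=true are eligible.
    nodeSelector: { model: "true" },
    // Without this toleration the taint would repel the pod.
    tolerations: [{ key: "model", operator: "Equal", value: "true", effect }],
  };
}

// Print as JSON; the fields belong under spec.template.spec of a Deployment.
console.log(JSON.stringify(modelScheduling(), null, 2));
```

Label and taint values are strings in Kubernetes, so `"true"` is quoted on purpose.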